You probably remember that our first experience with FreeSync was, to say the least, disappointing. As a reminder, FreeSync is AMD’s answer to Nvidia’s G-Sync: a technology for handling variable display refresh rates. The idea is to improve the gaming experience by providing a greater sensation of fluidity. How is FreeSync doing these days, you might ask? As long as the right monitor is used, it actually works quite well, and it costs quite a bit less than G-Sync!

After much fanfare in January 2014, the first FreeSync-compatible monitors did not arrive until March 2015. And, unfortunately, their performance was far from impressive. On paper, this approach to monitor management offered many advantages, starting with the fact that it relied on an open standard, which is much less costly than Nvidia’s proprietary G-Sync. However, entry-level monitors that did not deliver the required fluidity left us disappointed with the whole concept.

Adaptive-Sync, FreeSync and G-Sync

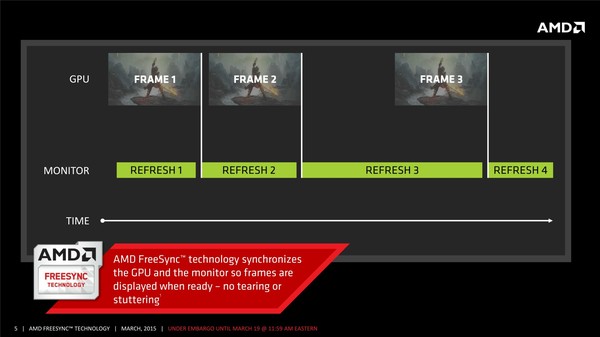

Under the banner of these three terms, or brands if you prefer, lies the technology for managing variable refresh rates (VRR). A classic fixed refresh rate of 60 Hz offers an imperfect visual experience when playing video games. Because each image is different, the time required to render each one also differs, and this discrepancy causes synchronization problems. A higher refresh rate, 144 Hz for example, reduces the impact of the problem but does not eliminate it entirely. The graphics card may miss a display cycle, spend too long waiting for the next one, or ignore the cycle altogether, resulting in either stutter or image breakup (tearing).

The principle behind VRR is quite simple: since the graphics card has to bend over backwards to accommodate the monitor’s refresh rate, why not make the monitor adapt to the graphics card instead? The concept seems obvious, but implementing it required a great deal of effort in redesigning monitors, since it involves displaying images for intervals that must be as precise as they are variable.
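To make the synchronization problem concrete, here is a minimal Python sketch comparing a fixed 60 Hz refresh with V-Sync against VRR. It rests on simplifying assumptions: the GPU renders frames back to back without ever being throttled, a finished frame under V-Sync is held until the next fixed refresh boundary, and under VRR the monitor refreshes as soon as a frame is ready. The render times and function names are hypothetical, chosen only for illustration.

```python
import math

def vsync_display_times(render_ms, refresh_hz):
    """Fixed refresh rate with V-Sync: a finished frame is held until the
    next refresh boundary, and at most one new frame is shown per refresh."""
    period = 1000.0 / refresh_hz
    done = 0.0        # time (ms) at which the GPU finishes the current frame
    prev = None       # time (ms) at which the previous frame was displayed
    shown = []
    for r in render_ms:
        done += r
        slot = math.ceil(done / period) * period   # next fixed refresh
        if prev is not None and slot <= prev:
            slot = prev + period                    # that refresh is already taken
        shown.append(slot)
        prev = slot
    return shown

def vrr_display_times(render_ms):
    """Variable refresh rate: the monitor refreshes as soon as a frame is ready
    (assuming the frame time stays inside the monitor's VRR range)."""
    shown, done = [], 0.0
    for r in render_ms:
        done += r
        shown.append(done)
    return shown

def intervals(times):
    """Time between consecutive displayed frames, rounded to 0.1 ms."""
    return [round(b - a, 1) for a, b in zip(times, times[1:])]

# Hypothetical render times (ms) for a game running at roughly 57 FPS
frames = [15.0, 19.0, 17.0, 21.0, 16.0, 18.0]
print(intervals(vsync_display_times(frames, 60)))  # → [33.3, 16.7, 16.7, 16.7, 16.7]
print(intervals(vrr_display_times(frames)))        # → [19.0, 17.0, 21.0, 16.0, 18.0]
```

The single 33.3 ms gap is the stutter: the second frame took 19 ms, missed its refresh, and the first frame stayed on screen for two cycles. With VRR, each frame stays on screen exactly as long as the next one takes to render.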

G-Sync: the first of its kind?

The principle of VRR for improving fluidity in games was first made public and exploited by Nvidia with its G-Sync system, but it is difficult to determine whether Nvidia was truly the first to come up with the concept. On the one hand, Nvidia claims to have worked on the problem for many years; on the other hand, it has come to light that the VESA consortium was working on a similar approach at around the same time, with the distinction that it was being standardized for DisplayPort. This became Adaptive-Sync, a standard proposed by AMD, which is now pushing the technology to the forefront through its graphics cards and drivers.

The exact chronology of these developments is hard to determine. AMD and Nvidia have tried to say as little about it as possible, all the while fiercely defending their contributions to the field. It is entirely possible that AMD urged the VESA consortium to develop their standard after learning about the G-Sync project. It is equally possible that, based on preliminary discussions with VESA members, Nvidia saw the opportunity to undercut the competition by implementing a proprietary standard designed to reduce the development time for monitor manufacturers.

At any rate, it is Nvidia’s G-Sync that made VRR a reality in 2013 and pushed AMD to speed up its own development. In retrospect, it is also clear that Nvidia’s expensive, proprietary solution allowed the whole industry to advance more rapidly. After an initial demonstration of FreeSync in January 2014, it took until March 2015 for the first compatible displays to reach the market, and until December of that same year for FreeSync to start working properly thanks to better monitors and improved drivers. In the end, Nvidia’s head start amounted to only about two years.

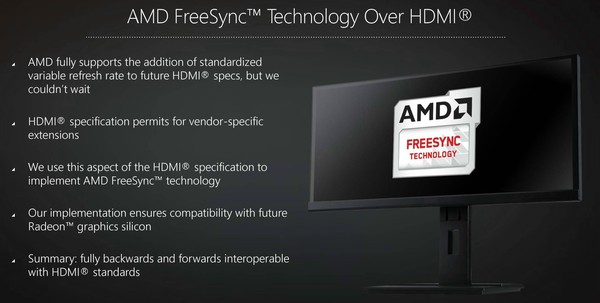

It should be noted that while FreeSync exploits the Adaptive-Sync standard over DisplayPort, AMD also offers a proprietary implementation that works over HDMI. It works in a similar way to Adaptive-Sync but is not, for the moment, part of the HDMI standard. This approach increases the number of displays compatible with FreeSync.

FreeSync and G-Sync, what are the differences between them?

While the two technologies work in a similar way, their implementations differ significantly, as we already noted in our respective reviews. As a reminder, for desktop G-Sync, Nvidia’s strategy is to control both the monitor and the graphics card; to this end it developed a dedicated module to be integrated into monitors. This G-Sync module relies on a programmable FPGA-type processor coupled with a memory buffer, and this dedicated hardware translates into a high cost. FreeSync, on the other hand, relies on Adaptive-Sync, a standard that most recent scalers can handle. These are small, inexpensive, mass-produced controllers.

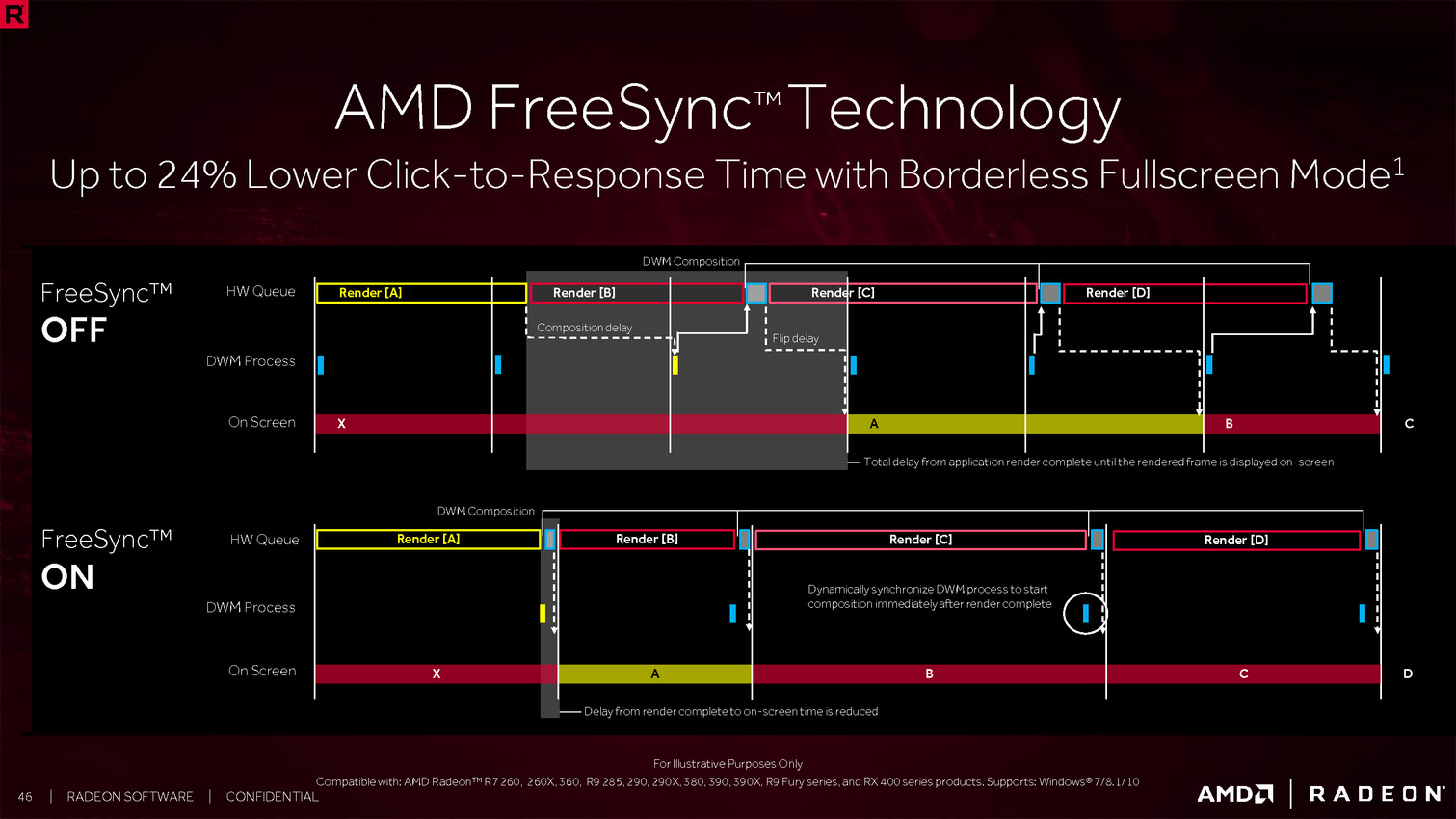

Nvidia explains that desktop G-Sync works explicitly: the graphics card communicates with the display (polling) to make sure it is ready to receive each image. FreeSync and Adaptive-Sync, on the other hand, work implicitly: the display tells the graphics card the range of refresh rates it can handle, and the graphics card and its drivers then decide how best to drive the monitor using that information. This subtle difference may seem unimportant, but it has had a fundamental impact on how Nvidia and AMD each deliver fluidity at low frame rates.
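The implicit model can be sketched as follows, assuming the driver simply keeps each frame’s display interval within the limits the monitor advertises, with no low-frame-rate compensation. The `ReportedRange` type and `scanout_interval_ms` function are hypothetical illustrations, not AMD’s actual driver code. The example uses a 48-144 Hz range, as on the Acer XF240H, to show why a dip below 48 FPS takes the frame rate outside the VRR window.

```python
from dataclasses import dataclass

@dataclass
class ReportedRange:
    """Refresh-rate range advertised by the display (hypothetical structure)."""
    min_hz: float
    max_hz: float

def scanout_interval_ms(frame_time_ms, rng):
    """Return (interval in ms until the next refresh, what happens) for a basic
    VRR window with no low-frame-rate compensation."""
    shortest = 1000.0 / rng.max_hz   # the panel cannot refresh faster than max_hz
    longest = 1000.0 / rng.min_hz    # ...and must refresh at least every 1/min_hz
    if frame_time_ms < shortest:
        return shortest, "frame waits for the panel"
    if frame_time_ms <= longest:
        return frame_time_ms, "refresh follows the frame"
    # The panel has to refresh before the new frame is ready: VRR no longer helps
    return longest, "panel refreshes without a new frame"

rng = ReportedRange(min_hz=48, max_hz=144)
for fps in (100, 50, 45):
    print(fps, "FPS:", scanout_interval_ms(1000.0 / fps, rng)[1])
# 100 FPS: refresh follows the frame
# 50 FPS: refresh follows the frame
# 45 FPS: panel refreshes without a new frame
```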

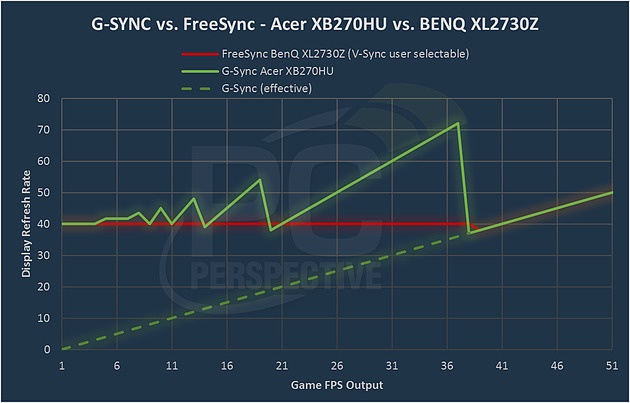

If our first impressions of FreeSync were mixed, it was for two main reasons. First, the phenomenon known as ghosting was poorly handled by the first monitor we tested. Second, fluidity suffered enormously as soon as the frame rate dropped below the lowest refresh rate the monitor could handle.

This last problem was particularly frustrating, since on many of the first FreeSync-compatible displays (and on many of those available today) the lower refresh rate limit was not very low at all: 40 to 48 Hz is still commonplace. When aiming for an average frame rate of around 60 FPS, it is not uncommon to dip briefly below 48 or even 40 FPS, and each such dip destroys the very fluidity we are aiming for.

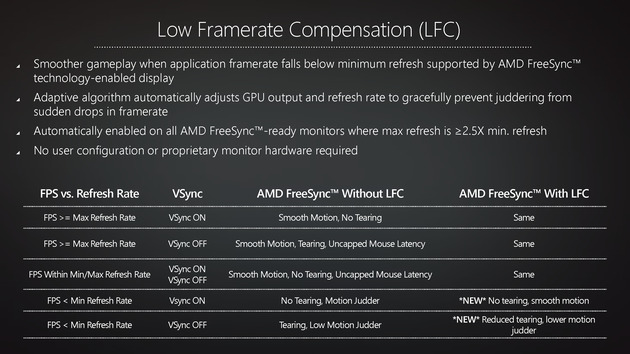

G-Sync compatible displays generally work with a lower limit of 30 Hz, which requires high-quality panels that do not have trouble reproducing colors or brightness at very low refresh rates. Nothing prevents FreeSync displays from using better panels, but doing so increases their cost. What’s more, G-Sync monitors avoid jerkiness even when the frame rate drops below 30 FPS.

As our colleagues at PCPerspective have demonstrated with the help of an oscilloscope, Nvidia tackled the problem head-on with a technique that seems self-evident. If the display is limited to 30 Hz, what can be done when the frame rate drops to 29 FPS? The solution is to switch to 58 Hz and display each image twice. And at 14 FPS? Again, simply switch to 42 Hz and display each image three times, and so on. This approach makes it possible to simulate a display with a lower refresh rate limit of 1 Hz (even if in reality Nvidia does not go that far), and so to maintain fluidity for as long as the frame rate itself allows.
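The multiplication rule can be expressed in a few lines. This is a minimal sketch assuming the panel can refresh at any integer multiple of the frame rate that falls within its range; `repeat_count` is a hypothetical name, and real implementations (Nvidia’s module, AMD’s LFC) add safety margins on top of this basic rule.

```python
import math

def repeat_count(fps, min_hz, max_hz):
    """Smallest number of times each frame must be shown so that the resulting
    refresh rate (fps * k) lies inside [min_hz, max_hz]; None if impossible.
    Frame rates above max_hz are out of scope and also return None."""
    if fps > max_hz:
        return None
    if fps >= min_hz:
        return 1
    k = math.ceil(min_hz / fps)
    return k if fps * k <= max_hz else None

for fps in (45, 29, 14):
    k = repeat_count(fps, 30, 144)
    print(f"{fps} FPS: each frame shown {k}x, panel at {fps * k} Hz")
# 45 FPS: each frame shown 1x, panel at 45 Hz
# 29 FPS: each frame shown 2x, panel at 58 Hz
# 14 FPS: each frame shown 3x, panel at 42 Hz
```

The `None` case matters for narrow ranges: on a 40-48 Hz window, 25 FPS doubled gives 50 Hz, which is already above the upper limit.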

The G-Sync module is in charge of holding the last image in its buffer and displaying it as many times as necessary, with a certain safety margin. For example, if the frame rate hovers around 29-30 FPS, Nvidia calibrates the module so that the display stays at around 58-60 Hz while doubling every image, instead of oscillating between 30 and 58 Hz. Although we were not able to verify this in practice, we presume that such extreme variations in refresh rate would cause artifacts such as flickering. Whatever the case, this control of the display by the G-Sync module is probably why the graphics card has to keep polling the display to check whether it is ready to receive a new image. This communication has an impact on performance and latency, but it is negligible.
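The margin behaviour described above can be modelled as a small state machine with hysteresis. This is an illustrative sketch only: the `FrameRepeater` class and its 10% default margin are assumptions, not Nvidia’s actual calibration. The repeat count goes up immediately when the frame rate falls below the panel’s limit, but only comes back down once the frame rate is comfortably above it.

```python
import math

class FrameRepeater:
    """Picks how many times to show each frame, with hysteresis: the repeat
    count goes up immediately when needed, but only comes back down once the
    frame rate is comfortably above the threshold. The 10% default margin is an
    illustrative assumption, not Nvidia's actual calibration."""

    def __init__(self, min_hz, max_hz, margin=0.1):
        self.min_hz = min_hz
        self.max_hz = max_hz
        self.margin = margin
        self.k = 1  # current repeat count

    def update(self, fps):
        """Return (repeat count, resulting refresh rate in Hz) for this frame rate."""
        needed = max(1, math.ceil(self.min_hz / fps))
        if needed > self.k:
            self.k = needed  # below the lower limit: repeat more, right away
        # Step down only if the lower count clears the limit by the margin,
        # or if the current count would push the panel above max_hz.
        while self.k > needed and (
            fps * (self.k - 1) >= self.min_hz * (1 + self.margin)
            or fps * self.k > self.max_hz
        ):
            self.k -= 1
        return self.k, fps * self.k

fps_trace = (29, 30, 31, 29.5, 32, 34)  # hypothetical frame rates hovering near 30 FPS
smooth = FrameRepeater(30, 144, margin=0.1)
naive = FrameRepeater(30, 144, margin=0.0)
print([smooth.update(f)[1] for f in fps_trace])  # → [58, 60, 62, 59.0, 64, 34]
print([naive.update(f)[1] for f in fps_trace])   # → [58, 30, 31, 59.0, 32, 34]
```

Without a margin, the refresh rate jumps back and forth between roughly 30 and 60 Hz. With a margin, it stays near 60 Hz until the frame rate clearly leaves the threshold zone.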

LFC, three crucial letters to accompany FreeSync

Initially, FreeSync only engaged VRR when the frame rate was within the range of refresh rates the display could handle. Below this range, and depending on the player’s settings, the system fell back to a fixed refresh rate, either with V-Sync ON and a high degree of stutter, or with V-Sync OFF and less stutter but more tearing. The result was very unpleasant as soon as the frame rate left the optimal range.

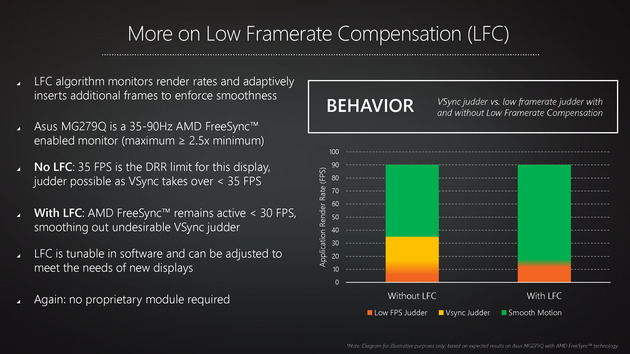

It took some time for AMD to tackle this fundamental problem. The Crimson Edition drivers, released just over a year ago, introduced a new feature called LFC, for Low Framerate Compensation. As the name suggests, it is designed to deliver fluidity at low frame rates, below the range the display’s VRR can handle. Unlike G-Sync, it is the graphics card together with the drivers that decides at any given moment whether to double, triple or quadruple each image in order to simulate a wider VRR range.

As AMD first introduced it, the LFC algorithm activated automatically (in principle) as soon as the ratio between the upper and lower limits of the VRR range was at least 2.5x, without any modification to the display’s firmware. This ratio was chosen to provide enough margin to absorb frame-rate variations that might otherwise prove too extreme, and to leave enough time to switch LFC mode off again.
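The nominal activation rule is a simple ratio test. The sketch below applies it to VRR ranges quoted elsewhere in this article; `lfc_eligible` is a hypothetical helper, and the 2.5x threshold is only the figure AMD cites, not necessarily the drivers’ exact logic.

```python
LFC_MIN_RATIO = 2.5  # nominal threshold AMD cites for automatic LFC activation

def lfc_eligible(min_hz, max_hz, min_ratio=LFC_MIN_RATIO):
    """True if the VRR range is wide enough for LFC under the nominal rule."""
    return max_hz / min_hz >= min_ratio

# VRR ranges quoted in this article
ranges = {
    "BenQ XL2730Z": (40, 144),
    "Asus MG279Q (stock)": (35, 90),
    "iiyama GB2488HSU-B2": (35, 120),
    "iiyama GE2488HS-B2": (55, 75),
    "LG 22MP68VQ-P": (56, 75),
}
for name, (lo, hi) in ranges.items():
    verdict = "LFC" if lfc_eligible(lo, hi) else "no LFC"
    print(f"{name}: {lo}-{hi} Hz, ratio {hi / lo:.2f} -> {verdict}")
```

The modified MG279Q range of 60-144 Hz (ratio 2.4) would fail this nominal check, even though LFC kept working with it. This suggests that 2.5x is a rule of thumb rather than a hard limit.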

In our experience, LFC mode did not work with every game at first. The situation improved with the drivers released 2-3 months after its introduction, even though AMD says it did not fix any bugs specifically related to FreeSync. It is possible that fixing another bug indirectly solved the problem. Since March 2016, with LFC mode now fully functional, we have finally been getting results worthy of our expectations for FreeSync.

Note that even though AMD still cites a ratio of at least 2.5x for LFC mode to activate automatically, some recent displays support it with a ratio of 2.14x or even 2.08x. We do not know whether the drivers have reduced their safety margin, or whether these displays go through an additional validation process in which they tell the drivers they can use LFC mode despite a ratio below 2.5x.

Obviously, with a ratio below 2x, frame doubling is impossible: a frame rate just below the lower limit, once doubled, would exceed the upper limit. This also applies to G-Sync; to the best of our knowledge, Nvidia requires a range of at least 30-60 Hz.
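The 2x limit follows from a one-line argument. The notation here is introduced only for illustration: f_min and f_max are the lower and upper limits of the VRR range, and f is a frame rate in the band that doubling has to cover.

```latex
% f_min, f_max: lower and upper limits of the VRR range; f: frame rate
\frac{f_{\min}}{2} \le f < f_{\min}
\;\Longrightarrow\;
f_{\min} \le 2f < 2f_{\min}
\qquad\text{so doubling always fits only if}\qquad
2f_{\min} \le f_{\max}
\;\Longleftrightarrow\;
\frac{f_{\max}}{f_{\min}} \ge 2
```

A 30-60 Hz range sits exactly at this limit (60/30 = 2). The 40-60 Hz range of many 4K FreeSync displays (ratio 1.5) does not: 39 FPS doubled is 78 Hz, well above 60 Hz.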

FreeSync, it works!

Several times since our initial, less-than-encouraging article about the Acer XG270HU and its first firmware, we have wanted to revisit FreeSync. To do so, we turned to an Asus MG279Q as well as a retail version of the BenQ XL2730Z equipped with the corrected firmware. While ghosting was no longer a problem on the Acer display, various other problems persisted until LFC mode arrived, and for a number of reasons we kept putting off publishing this article.

Better late than never. After the release of the Crimson ReLive Edition drivers, and before looking in more detail at AMD’s and Nvidia’s mid-range product lines, we decided to revisit the question. Although fluidity had already been at the desired level since March, some problems persisted, mainly because AMD had not yet implemented FreeSync support for borderless full-screen mode (unlike Nvidia, which had supported it for quite some time).

This now works with the latest drivers, and we have not encountered any problems since. All the games we tested are perfectly fluid with both G-Sync and FreeSync, and it is definitely hard to give up one of these displays for an old-fashioned 60 Hz model.

The BenQ XL2730Z is a TN display that offers a VRR range of 40 to 144 Hz at 1440p. At 550€ it is not cheap, and TN technology is not for everyone, but with LFC mode and a ghosting reduction system, it delivers excellent results for gamers.

The Asus MG279Q is also a 1440p 144 Hz display, but it uses IPS technology. It is a little more expensive (expect to pay 549$), but more versatile than a TN display. Although it is a 144 Hz display, its range is limited to 35-90 Hz when FreeSync is active; this is nonetheless wide enough for it to take advantage of LFC mode and achieve good fluidity.

Still, it would be nice to be able to use frame rates all the way up to the 144 Hz limit. We don’t know exactly where this limitation comes from: the controller or the panel? But don’t despair, it is possible to modify this limit!

As explained above, FreeSync works implicitly, based on the EDID information reported by the display. The system can be tricked by replacing these values with others, and the graphics card will apply them on the assumption that they correspond to the display. A simple tool such as CRU (Custom Resolution Utility) can modify the FreeSync refresh rate range. Of course, not everything can be tweaked, and it is best to look for information on specialized forums before attempting potentially risky modifications to your display. In the case of the MG279Q, we were able to change the range from 35-90 Hz to 60-144 Hz without difficulty while still taking advantage of LFC mode below 60 FPS.
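For readers curious about what such an edit involves, here is a sketch that patches the minimum and maximum vertical refresh rates in the Display Range Limits descriptor (tag 0xFD) of a 128-byte EDID base block, then recomputes the checksum. It rests on an assumption: that the FreeSync range is read from that descriptor, which is common for DisplayPort monitors but not guaranteed, since other connections or monitors may store it elsewhere. It is not a reproduction of CRU, it only modifies bytes in memory, and all helper names are hypothetical.

```python
# EDID base block (VESA E-EDID): 128 bytes, four 18-byte descriptors at
# offsets 54, 72, 90 and 108, checksum in byte 127 (all bytes sum to 0 mod 256).
DESCRIPTOR_OFFSETS = (54, 72, 90, 108)
RANGE_LIMITS_TAG = 0xFD

def find_range_descriptor(edid):
    """Offset of the Display Range Limits descriptor, or None if absent."""
    for off in DESCRIPTOR_OFFSETS:
        d = edid[off:off + 18]
        # Display descriptors (not detailed timings) start with 00 00 00 <tag>
        if d[0] == 0 and d[1] == 0 and d[2] == 0 and d[3] == RANGE_LIMITS_TAG:
            return off
    return None

def fix_checksum(edid):
    """Set byte 127 so that the 128 bytes sum to 0 modulo 256."""
    edid[127] = (-sum(edid[:127])) % 256

def set_vertical_range(edid, min_hz, max_hz):
    """Patch min/max vertical rate in an in-memory copy of the EDID base block.
    Assumes byte 4 (rate offset flags) is 0, so rates are stored directly (1-255 Hz)."""
    if len(edid) < 128:
        raise ValueError("need a full 128-byte EDID base block")
    off = find_range_descriptor(edid)
    if off is None:
        raise ValueError("no Display Range Limits descriptor found")
    if edid[off + 4] != 0:
        raise ValueError("rate offset flags set; not handled in this sketch")
    if not 1 <= min_hz < max_hz <= 255:
        raise ValueError("rates must satisfy 1 <= min < max <= 255")
    edid[off + 5] = min_hz   # minimum vertical rate (Hz)
    edid[off + 6] = max_hz   # maximum vertical rate (Hz)
    fix_checksum(edid)

# Demo on a synthetic EDID whose range descriptor advertises 35-90 Hz
edid = bytearray(128)
edid[0:8] = bytes([0x00, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0x00])  # fixed EDID header
# byte 10 = 0x01: "range limits only", followed by 0x0A and space padding
edid[72:90] = bytes([0, 0, 0, RANGE_LIMITS_TAG, 0, 35, 90, 30, 160, 30, 0x01, 0x0A] + [0x20] * 6)
fix_checksum(edid)
set_vertical_range(edid, 60, 144)
print(edid[77], edid[78], sum(edid) % 256)   # → 60 144 0
```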

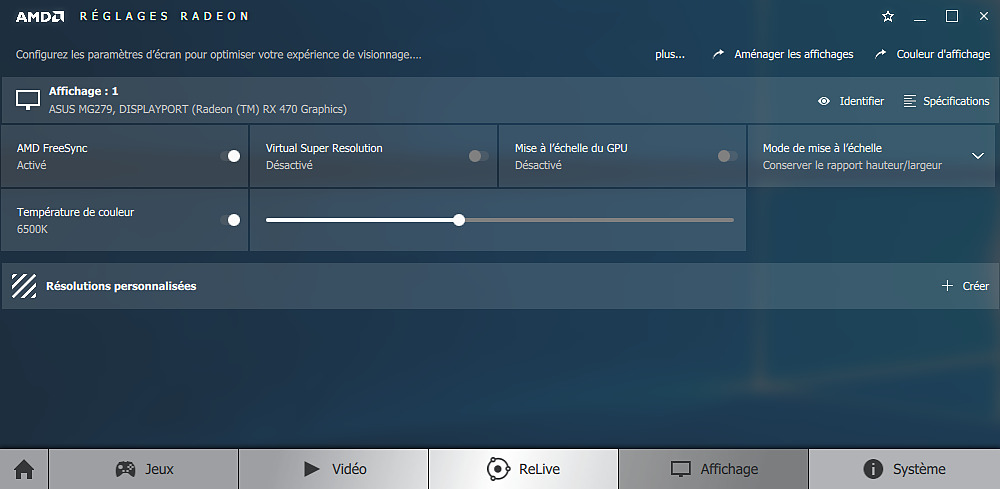

FreeSync can be enabled on the ‘Display’ page of the Radeon drivers if it is not enabled by default, and on some displays it must also be enabled in the OSD. But be careful: this does not guarantee that FreeSync is actually working. Checking with AMD’s demonstration tool is no guarantee either, since the tool bypasses the options configured in the drivers. The only easy way to be sure it is working is to watch the refresh rate vary in real time, which some displays allow through their OSD.

FreeSync and its price: does Radeon have the advantage? Yes!

As we have seen, FreeSync works just as well as G-Sync, and it allows lower-cost displays to be produced thanks to mass-produced controllers. Is that lower price enough to give Radeon the advantage? It certainly is, but this answer should be taken with a grain of salt.

Unfortunately, after a very long wait, and in order to win over its manufacturing partners, AMD has probably lowered the requirements a display must meet to receive FreeSync certification. Simply put, at some point AMD probably decided that any display with Adaptive-Sync support would be good enough.

This lack of meaningful minimum requirements means that many FreeSync displays on the market are not really cut out to deliver consistent fluidity in games. Many models, notably Samsung’s, make do with a range of 48 to 72 Hz and do not even support LFC mode. It is a large-scale joke that harms the FreeSync brand, and one that is not easy to fix, especially since many manufacturers are not keen on implementing LFC support.

According to our research, only FreeSync displays with LFC support are worth considering for gamers. A 125€ FreeSync display (LG 22MP68VQ-P) may seem appealing, but with a VRR range of 56 to 75 Hz and no LFC support, it will not provide a better gaming experience than a non-FreeSync display.

Supporting FreeSync in a way that really guarantees fluidity requires a higher-quality panel, which costs more. For example, an iiyama 24” 1080p display with a VRR range of 55-75 Hz and no LFC support costs 165€ (G-MASTER GE2488HS-B2 Black Hawk), while a similar model with a 35-120 Hz range and LFC support costs 300€ (G-MASTER GB2488HSU-B2 Red Eagle). The model numbers are almost identical and the price difference is significant, but only one of the two lets you fully enjoy FreeSync. This makes it hard for consumers to find their way, especially since the VRR range is not always clearly stated and LFC support is hardly ever mentioned.

On its FreeSync page, AMD has published a list specifying each display’s precise VRR range and whether it supports LFC, over both DP and HDMI (in some cases, switching to a DP cable will dramatically improve fluidity!). However, this list is not entirely up to date, and it would be nice if AMD were more active in this regard, especially since Samsung seems to be releasing an endless stream of new models.

By now you will probably have understood that you should avoid the cheapest FreeSync displays. In the end, is a FreeSync display with LFC support cheaper than a G-Sync display? The answer is yes! And not just by a little: by 150 to 200 dollars in the examples we have seen. There may, however, be some factors that justify the difference in price:

- Acer 24″ 1080p FreeSync 48-144 Hz (XF240H) : 199$

- Acer 24″ 1080p G-Sync 144 Hz (Predator XB241H) : 400$ (+200$)

- AOC 24″ 1080p FreeSync 35-144 Hz (G2460PF) : 209$

- AOC 24″ 1080p G-Sync 144 Hz (G2460PG) : 409$ (+200$)

- Acer 27″ 1440p FreeSync 40-144 Hz (Predator XG270HU) : 457$

- Acer 27″ 1440p G-Sync 165 Hz (Predator XB271HU) : 783$ (+326$)

For 4K monitors, however, things are a little more complicated: no current 4K FreeSync display supports LFC mode, and only the Acer BX320HK (35-60 Hz), which costs less than 1000€, offers a reasonably wide range of refresh rates. 4K FreeSync displays usually offer too narrow a range, from 40 to 60 Hz. The few 4K G-Sync displays therefore have an advantage thanks to their wider 30-60 Hz range.

If you don’t currently own a G-Sync or FreeSync display and are thinking of buying one soon, the price difference between the two systems is definitely worth considering; it may even be decisive, especially for entry-level and mid-range products. A bundle of a Radeon RX 480 8 GB and a FreeSync display saves 200€ compared with a similar Nvidia setup: a GeForce GTX 1060 6 GB and a G-Sync display! For the same amount of money, opting for Nvidia would force you to choose between fluidity and performance.

You might be wondering why Nvidia hasn’t decided to support Adaptive-Sync in addition to its proprietary module. By all appearances, its graphics cards would be capable of it, even if the results might be slightly inferior to the competition’s. So why hand AMD such an advantage?

We assume this is due to a carefully thought-out commercial strategy on the part of Nvidia, which dominates the “gamer” graphics card market. With G-Sync, Nvidia is not trying to stand out from the competition in order to gain market share, but rather to offer something special in its high-end product line. The aim is to offer extra comfort to gamers who are ready to pay more for the best possible gaming experience; this strategy also locks gamers into Nvidia products by forcing them to buy a G-Sync compatible display.

It would have been counterproductive for Nvidia to push the market towards lower-cost G-Sync displays, which would bring it no additional profit and could even convince some gamers to settle for less powerful graphics cards or to put off upgrading altogether. The situation is the complete opposite for AMD, which needs to convince gamers to trust it (again).

Although Nvidia’s strategy is logical, it is nonetheless regrettable, since it has split the gaming display market into two camps. This could change, but not until FreeSync-compatible graphics cards come to dominate the market.

It is worth mentioning that even without G-Sync or FreeSync, 144 Hz displays already improve fluidity when the frame rate is around 60 FPS, since they shorten the wait for the next refresh during synchronization. The results are obviously not as good as with VRR, but even in its default mode a 144 Hz display is certainly better than a 60 Hz model. For some time now, all 144 Hz displays have been either G-Sync or FreeSync compatible. It would make little sense to hook an expensive G-Sync display up to a Radeon card, but pairing a FreeSync display with a GeForce card could be an interesting compromise: without spending too much, you would keep the option of perfect fluidity should you later upgrade to a Radeon card. This is why it is in AMD’s interest for as many displays as possible to support FreeSync, even if not everyone who buys them uses FreeSync yet.
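The gain is easy to quantify with a back-of-the-envelope calculation. It assumes V-Sync is on and that a finished frame waits at most one refresh period T = 1/f before being scanned out:

```latex
% Refresh period T = 1/f; with V-Sync, a finished frame waits at most one period
T_{60} = \frac{1}{60\,\text{Hz}} \approx 16.7\,\text{ms}
\qquad
T_{144} = \frac{1}{144\,\text{Hz}} \approx 6.9\,\text{ms}
```

The worst-case wait for the next refresh therefore drops from about 16.7 ms to about 6.9 ms. A frame that just misses a refresh on a 144 Hz panel is delayed by one short cycle rather than a full 60 Hz one.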

We look forward to the next CES in January, where we expect announcements about the future direction of FreeSync and G-Sync, although any changes will probably be qualitative and limited to high-end products.
